Graphics drivers in Flatpak have been a bit of a pain point. The drivers have to be built against the runtime to work in the runtime. This usually isn’t much of an issue but it breaks down in two cases:

- If the driver depends on a specific kernel version
- If the runtime is end-of-life (EOL)

The first issue is what the proprietary Nvidia drivers exhibit. A specific user space driver requires a specific kernel driver. For drivers in Mesa, this isn’t an issue. In the medium term, we might get lucky here and the Mesa-provided Nova driver might become competitive with the proprietary driver. Not all hardware will be supported though, and some people might need CUDA or other proprietary features, so this problem likely won’t go away completely.

Currently we have a runtime extension for every Nvidia driver version, and the one matching the host’s kernel driver gets picked, but this isn’t great.

The second issue is even worse, because we don’t even have a somewhat working solution to it. A runtime which is EOL doesn’t receive updates, and neither does the runtime extension providing GL and Vulkan drivers. New GPU hardware just won’t be supported and the software rendering fallback will kick in.

How we deal with this is rather primitive: keep updating apps, don’t depend on EOL runtimes. This is in general a good strategy. An EOL runtime also doesn’t receive security updates, so users should not use them. Users will be users though, and if they have a goal which involves running an app which uses an EOL runtime, that’s what they will do. From a software archival perspective, it is also desirable to keep things working, even if using them should be strongly discouraged.

In all those cases, the user most likely still has a working graphics driver, just not in the Flatpak runtime, but on the host system. So one naturally asks oneself: why not just use that driver?

That’s a load-bearing “just”. Let’s explore our options.

Exploration

Attempt #1: Bind mount the drivers into the runtime.

Cool, we got the driver’s shared libraries and ICDs from the host in the runtime. If we run a program, it might work. It might also not work. The shared libraries have dependencies and because we are in a completely different runtime than the host, they most likely will be mismatched. Yikes.

Attempt #2: Bind mount the dependencies.

We got all the dependencies of the driver in the runtime. They are satisfied and the driver will work. But your app most likely won’t. It has dependencies that we just changed under its nose. Yikes.

Attempt #3: Linker magic.

Until here everything is pretty obvious, but it turns out that linkers are actually quite capable and support what’s called linker namespaces. In a single process one can load two completely different sets of shared libraries which will not interfere with each other. We can bind mount the host shared libraries into the runtime, and dlmopen the driver into its own namespace. This is exactly what libcapsule does. It does have some issues though, one being that the libc can’t be loaded into multiple linker namespaces because it manages global resources. We can use the runtime’s libc, but the host driver might require a newer libc. We can use the host libc, but now we contaminate the app’s linker namespace with a dependency from the host.

Attempt #4: Virtualization.

All of the previous attempts try to load the host shared objects into the app. Besides the issues mentioned above, this has a few more fundamental issues:

- The Flatpak runtimes support i386 apps; those would require an i386 driver on the host, but modern systems only ship amd64 code.
- We might want to support emulation of other architectures later.
- It leaks an awful lot of the host system into the sandbox.
- It breaks the strict separation of the host system and the runtime.

If we avoid getting code from the host into the runtime, all of those issues just go away, and GPU virtualization via Virtio-GPU with Venus allows us to do exactly that.

The VM uses the Venus driver to record and serialize the Vulkan commands, and sends them to the hypervisor via the virtio-gpu kernel driver. The host uses virglrenderer to deserialize and execute the commands.

This makes sense for VMs, but we don’t have a VM, and we might not have the virtio-gpu kernel module, and we might not be able to load it without privileges. Not great.

It turns out, however, that the developers of virglrenderer also don’t want to have to run a VM to run and test their project and thus added vtest, which uses a Unix socket to transport the commands from the Mesa Venus driver to virglrenderer.
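To make the idea of "serialize on one side, replay on the other" a bit more concrete, here is a toy model of that transport. It is only a sketch: the length-prefixed JSON framing and the names send_command, recv_command and replay are made up for illustration and have nothing to do with the real Venus or vtest wire format.

```python
import json
import socket
import struct


def send_command(sock, name, *args):
    """Serialize one command and write it to the socket (the "driver" side)."""
    payload = json.dumps({"cmd": name, "args": list(args)}).encode()
    # 4-byte big-endian length prefix, then the payload (hypothetical framing).
    sock.sendall(struct.pack("!I", len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes or raise if the peer went away."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise EOFError("peer closed the socket")
        buf += chunk
    return buf


def recv_command(sock):
    """Deserialize one command from the socket (the "renderer" side)."""
    (length,) = struct.unpack("!I", recv_exact(sock, 4))
    msg = json.loads(recv_exact(sock, length))
    return msg["cmd"], msg["args"]


def replay(sock, handlers, count):
    """Receive `count` commands and execute them with the given handlers."""
    results = []
    for _ in range(count):
        name, args = recv_command(sock)
        results.append(handlers[name](*args))
    return results
```

With socket.socketpair() standing in for the Unix socket, one end plays the sandboxed driver and the other end plays the host-side renderer. No host code ever has to be loaded into the sending process, which is the whole point of the approach.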

It also turns out that I’m not the first one who noticed this, and there is some glue code which allows Podman to make use of virgl.

You can most likely test this approach right now on your system by running two commands:

    rendernodes=(/dev/dri/render*)
    virgl_test_server --venus --use-gles --socket-path /tmp/flatpak-virgl.sock --rendernode "${rendernodes[0]}" &
    flatpak run --nodevice=dri --filesystem=/tmp/flatpak-virgl.sock --env=VN_DEBUG=vtest --env=VTEST_SOCKET_NAME=/tmp/flatpak-virgl.sock org.gnome.clocks

If we integrate this well, the existing driver selection will ensure that this virtualization path is only used if there isn’t a suitable driver in the runtime.
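If you want to script the experiment instead of typing it, the invocation above could be assembled roughly like this. The flags are copied from the commands in this post; the helper names (first_render_node, server_argv, flatpak_argv) are hypothetical and only exist for this sketch.

```python
import glob


def first_render_node(pattern="/dev/dri/render*"):
    """Return the first DRM render node, or None if the host has none."""
    nodes = sorted(glob.glob(pattern))
    return nodes[0] if nodes else None


def server_argv(socket_path, rendernode):
    """Command line for the host-side vtest server, as used in the post."""
    return [
        "virgl_test_server", "--venus", "--use-gles",
        "--socket-path", socket_path,
        "--rendernode", rendernode,
    ]


def flatpak_argv(socket_path, app_id):
    """Command line that hides /dev/dri and points Venus at the socket."""
    return [
        "flatpak", "run",
        "--nodevice=dri",
        f"--filesystem={socket_path}",
        "--env=VN_DEBUG=vtest",
        f"--env=VTEST_SOCKET_NAME={socket_path}",
        app_id,
    ]
```

Both lists can be handed to subprocess.Popen and subprocess.run respectively. Nothing here waits for the server to be ready or cleans it up afterwards, which is exactly the gap the next section is about.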

Implementation

Obviously the commands above are a hack. Flatpak should automatically do all of this, based on the availability of the dri permission.

We actually already start a host program and stop it when the app exits: xdg-dbus-proxy. It’s a bit involved because we have to wait for the program (in our case virgl_test_server) to provide the service before starting the app. We also have to shut it down when the app exits, but Flatpak is not a supervisor. You won’t see it in the output of ps because it just execs bubblewrap (bwrap) and ceases to exist before the app has even started. So instead we have to use the kernel’s automatic cleanup of kernel resources to signal to virgl_test_server that it is time to shut down.

The way this is usually done is via a so-called sync fd. If you have a pipe and poll the file descriptor of one end, it becomes readable as soon as the other end is written to or closed. Bubblewrap supports this kind of sync fd: you can hand in one end of a pipe and it ensures the kernel will close the fd once the app exits.
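The mechanism is easy to try outside of Flatpak. The following is a minimal sketch, not what Bubblewrap or virglrenderer actually do: a plain Python child process stands in for the sandboxed app and inherits the write end of a pipe, while the parent plays the role of the service that wants to know when the app is gone. The names spawn_with_sync_fd and peer_has_exited are made up for this example.

```python
import os
import select
import subprocess
import sys

# Stand-in for the sandboxed app: it stays alive until its stdin is closed.
APP_CODE = "import sys; sys.stdin.read()"


def spawn_with_sync_fd(code=APP_CODE):
    """Start a child that inherits the write end of a fresh pipe."""
    read_fd, write_fd = os.pipe()
    app = subprocess.Popen(
        [sys.executable, "-c", code],
        stdin=subprocess.PIPE,
        pass_fds=(write_fd,),  # the child keeps this fd open until it exits
    )
    os.close(write_fd)  # from now on only the child holds the write end
    return app, read_fd


def peer_has_exited(read_fd, timeout_ms):
    """True once every copy of the write end is closed (or data arrived)."""
    poller = select.poll()
    poller.register(read_fd, select.POLLIN)  # POLLHUP is always reported
    return bool(poller.poll(timeout_ms))
```

The child never touches the fd. It only has to exist, and the kernel closes the write end when the process goes away, no matter whether it exits cleanly or gets killed. That is why this works without a supervisor.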

One small problem: only one of those sync fds is supported in bwrap at the moment, but we can add support for multiple in Bubblewrap and Flatpak.

For waiting for the service to start, we can reuse the same pipe, but write to the other end in the service, and wait for the fd to become readable in Flatpak, before exec’ing bwrap with the same fd. Also not too much code.
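The readiness half of the handshake can be sketched the same way. Again, this is an illustration under assumptions rather than the actual Flatpak or virglrenderer code: the one-byte "I am ready" message, the SERVICE stand-in and the function start_and_wait_ready are all invented for this example.

```python
import os
import subprocess
import sys

# Stand-in for the service: once it is "ready", it writes one byte to the
# fd whose number it got on the command line.
SERVICE = "import os, sys; fd = int(sys.argv[1]); os.write(fd, b'1'); os.close(fd)"


def start_and_wait_ready(service_code=SERVICE):
    """Start the service and block until it reports readiness or dies."""
    read_fd, write_fd = os.pipe()
    proc = subprocess.Popen(
        [sys.executable, "-c", service_code, str(write_fd)],
        pass_fds=(write_fd,),  # same fd number inside the child
    )
    os.close(write_fd)  # otherwise we would never see EOF if the service dies
    data = os.read(read_fd, 1)  # one byte means ready, b"" means EOF
    return proc, read_fd, data != b""
```

If the service crashes before it gets to write, the read returns an empty result instead of blocking forever, so the launcher can tell "ready" from "never came up". In the real thing, Flatpak would then exec bwrap and pass its end of the pipe along.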

Finally, virglrenderer needs to learn how to use a sync fd. Also pretty trivial. There is an older MR which adds something similar for the Podman hook, but it misses the code which allows Flatpak to wait for the service to come up, and it never got merged.

Overall, this is pretty straightforward.

Conclusion

The virtualization approach should be a robust fallback for all the cases where we don’t get a working GPU driver in the Flatpak runtime, but there are a bunch of issues and unknowns as well.

It is not entirely clear how forwards and backwards compatible vtest is, whether it is even supposed to be used in production, and whether it provides a strong security boundary.

None of that is a fundamental issue though and we could work out those issues.

It’s also not optimal to start virgl_test_server for every Flatpak app instance.

Given that we’re trying to move away from blanket dri access to a more granular and dynamic access to GPU hardware via a new daemon, it might make sense to use this new daemon to start the virgl_test_server on demand and only for allowed devices.

Photo by Tetsuji Koyama, licensed under CC BY 4.0

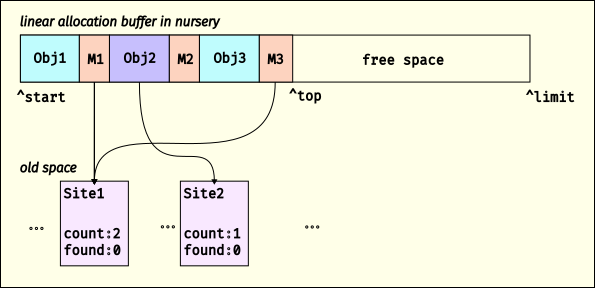

A linear allocation buffer containing objects allocated with allocation mementos