Ignacy Kuchciński: Digital Wellbeing Contract: Conclusion

news.movim.eu / PlanetGnome • 20 January 2026 • 2 minutes

A lot of progress has been made since my last Digital Wellbeing update two months ago. That post covered the initial screen time limits feature, which was implemented in the Parental Controls app, Settings and GNOME Shell. There’s a screen recording in the post, created with the help of a custom GNOME OS image, in case you’re interested.

Finishing Screen Time Limits

After implementing the major framework for the rest of the code in GNOME Shell, we added the mechanism in the lock screen to prevent children from unlocking when the screen time limit is up. Parents are now also able to extend the session limit temporarily, so that the child can use the computer for the rest of the day.

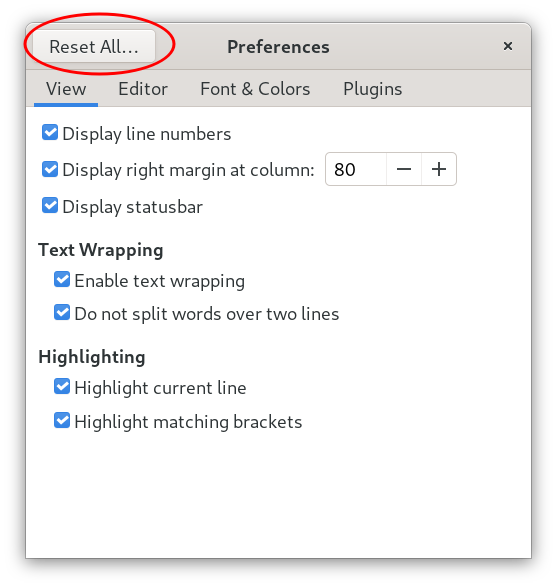

Parental Controls Shield

Screen time limits can be set as either a daily limit or a bedtime. With the work that has recently landed, when the screen time limit has been exceeded, the session locks and the authentication action is hidden on the lock screen. Instead, a message is displayed explaining that the current session is limited and the child cannot log in. An “Ignore” button is presented to allow the parents to temporarily lift the restrictions when needed.

Parental Controls shield on the lock screen, preventing the children from unlocking

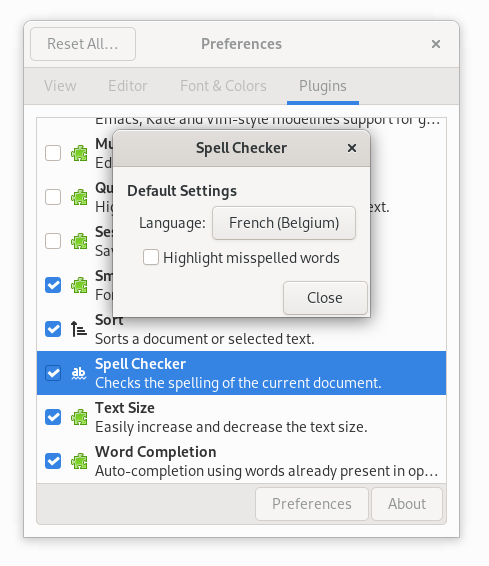

Extending Screen Time

Clicking the “Ignore” button prompts for authentication by a user with administrative privileges. This allows parents to temporarily lift the screen time limit, so that the children may log in as normal for the rest of the day.

Authentication dialog allowing the parents to temporarily override the Screen Time restrictions
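
As a rough illustration of the behaviour described above, the locking and extension rules could be modelled as follows. This is a hypothetical Python sketch, not the actual GNOME Shell code: the `ScreenTimePolicy` class, its fields and its method names are invented for the example, and bedtime is simplified to mean "from the bedtime until midnight".

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta
from typing import Optional


@dataclass
class ScreenTimePolicy:
    """Hypothetical model of a child's screen time limits (not a GNOME API)."""

    daily_limit: Optional[timedelta] = None  # maximum usage per day, if set
    bedtime: Optional[time] = None           # assumed: locked from here until midnight
    extended_on: Optional[date] = None       # day on which a parent lifted the limits

    def extend_for_today(self, now: datetime) -> None:
        # Assumed to run only after an administrator has authenticated
        # through the "Ignore" button on the lock screen.
        self.extended_on = now.date()

    def is_locked(self, now: datetime, used_today: timedelta) -> bool:
        # An extension only lasts for the rest of the day it was granted on.
        if self.extended_on == now.date():
            return False
        if self.daily_limit is not None and used_today >= self.daily_limit:
            return True
        if self.bedtime is not None and now.time() >= self.bedtime:
            return True
        return False


policy = ScreenTimePolicy(daily_limit=timedelta(hours=2), bedtime=time(21, 0))
evening = datetime(2026, 1, 20, 18, 0)
over_limit = timedelta(hours=2, minutes=5)

print(policy.is_locked(evening, over_limit))   # True: daily limit exceeded
policy.extend_for_today(evening)
print(policy.is_locked(evening, over_limit))   # False: parent extended the session
next_day = evening + timedelta(days=1)
print(policy.is_locked(next_day, over_limit))  # True: extension expired overnight
```

The extension is stored as a date rather than a flag, so it lapses by itself on the following day without any explicit reset step.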

Showcase

Continuing the screencast of the Shell functionality from the previous update, I’ve recorded the parental controls shield together with the functionality for extending screen time:

GNOME OS Image

You can also try the feature out for yourself, with the very same GNOME OS live image I’ve used in the recording, that you can either run in GNOME Boxes, or try on your hardware if you know what you’re doing.

Conclusion

Now that the full Screen Time Limits functionality has been merged in GNOME Shell, this concludes my part in the Digital Wellbeing Contract. Here’s the summary of the work:

- We’ve redesigned the Parental Controls app and updated it to use modern GNOME technologies

- New features were added, such as Screen Time monitoring and setting limits: daily limit and bedtime schedule

- GNOME Settings gained Parental Controls integration, to helpfully inform the user about the existence of the limits

- We introduced the screen time limits in GNOME Shell, locking children’s sessions once they reach their limit. Children are then prevented from unlocking until the next day, unless parents extend their screen time

In the initial plan, we also covered web filtering, and the foundation of the feature has been introduced as well. However, integrating the functionality in the Parental Controls application has been postponed to a future endeavour.

I’d like to thank the GNOME Foundation for giving me this opportunity, and Endless for sponsoring the work. Also kudos to my colleagues, Philip Withnall and Sam Hewitt: it’s been great to work with you and I’ve learned a lot (like the importance of wearing Christmas sweaters in work meetings!). Thanks as well to Florian Müllner, Matthijs Velsink and Felipe Borges for very helpful reviews. I also want to thank Allan Day for organizing the work hours and meetings, and for helping with my blog posts.

Until next project!