Andy Wingo: the last couple years in v8's garbage collector

news.movim.eu / PlanetGnome • 13 November 2025 • 9 minutes

Let’s talk about memory management! Following up on my article about 5 years of developments in V8’s garbage collector, today I’d like to bring that up to date with what went down in V8’s GC over the last couple years.

methodololology

I selected all of the commits to src/heap since my previous roundup. There were 1600 of them, including reverts and relands. I read all of the commit logs, some of the changes, some of the linked bugs, and any design document I could get my hands on. From what I can tell, there have been about 4 FTE from Google over this period, and the commit rate is fairly constant. There are very occasional patches from Igalia, Cloudflare, Intel, and Red Hat, but it’s mostly a Google affair.

Then, by the very rigorous process of, um, just writing things down and thinking about it, I see three big stories for V8’s GC over this time, and I’m going to give them to you with some made-up numbers for how much of the effort was spent on them. Firstly, the effort to improve memory safety via the sandbox: this is around 20% of the time. Secondly, the Oilpan odyssey: maybe 40%. Thirdly, preparation for multiple JavaScript and WebAssembly mutator threads: 20%. Then there are a number of lesser side quests: heuristics wrangling (10%!!!!), and a long list of miscellanea. Let’s take a deeper look at each of these in turn.

the sandbox

There was a nice blog post in June last year summarizing the sandbox effort: basically, the goal is to prevent user-controlled writes from corrupting memory outside the JavaScript heap. We start from the assumption that the user is somehow able to obtain a write-anywhere primitive, and we work to mitigate the effect of such writes. The most fundamental way is to reduce the range of addressable memory, notably by encoding pointers as 32-bit offsets and then ensuring that no host memory is within the addressable virtual memory that an attacker can write. The sandbox also uses some 40-bit offsets for references to larger objects, with similar guarantees. (Yes, a sandbox really does reserve a terabyte of virtual memory.)
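To make the offset encoding concrete, here is a minimal sketch under stated assumptions. The names `Cage`, `Compress`, `Decompress`, and `InCage` are hypothetical, the 4 GiB cage size follows from the 32-bit offsets mentioned above, and none of this is V8’s actual code. The point is only that whatever 32-bit value ends up in a field, decoding it yields an address inside the reserved region.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical illustration, not V8's API. Addresses are modelled as
// uint64_t so the arithmetic is explicit.
constexpr uint64_t kCageSize = uint64_t{1} << 32;  // 4 GiB, reachable with 32 bits

struct Cage {
  uint64_t base;  // start of the reserved virtual-memory region
};

using Compressed = uint32_t;

// Store a full address as a 32-bit offset from the cage base.
inline Compressed Compress(const Cage& cage, uint64_t addr) {
  assert(addr >= cage.base && addr - cage.base < kCageSize);
  return static_cast<Compressed>(addr - cage.base);
}

// Any 32-bit value, including one written by an attacker, decodes to an
// address in [base, base + 4 GiB).
inline uint64_t Decompress(const Cage& cage, Compressed offset) {
  return cage.base + offset;
}

// Unsigned wrap-around makes addresses below the base fail this check too.
inline bool InCage(const Cage& cage, uint64_t addr) {
  return addr - cage.base < kCageSize;
}
```

With this shape, a corrupted field can point at the wrong object inside the cage, but it cannot name host memory outside it, provided nothing sensitive is mapped in that range.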

But there are many, many details. Access to external objects is intermediated via type-checked external pointer tables. Some objects that should never be directly referenced by user code go in a separate “trusted space”, which is outside the sandbox. Then you have read-only spaces, used to allocate data that might be shared between different isolates; you might want multiple cages; there are “shared” variants of the other spaces, for use in shared-memory multi-threading; there are executable code spaces with embedded object references; and so on and so on. Tweaking, elaborating, and maintaining all of these details has taken a lot of V8 GC developer time.
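As a rough illustration of what “type-checked external pointer table” can mean, consider the sketch below. It is an assumption-laden toy: the `Tag` values, the `ExternalPointerTable` class, and its `Add`/`Get` methods are hypothetical names, and V8’s real table is laid out differently. The idea it shows is that sandboxed objects hold only a small index, and the table, which lives outside the sandbox, refuses to hand out a pointer unless the caller asks for the type that was registered.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical tags; a real table would have many more.
enum class Tag : uint8_t { kFree, kArrayBufferBackingStore, kEmbedderObject };

struct Entry {
  void* pointer;
  Tag tag;
};

// Lives outside the sandbox; sandboxed objects store only the handle.
class ExternalPointerTable {
 public:
  uint32_t Add(void* pointer, Tag tag) {
    assert(tag != Tag::kFree);
    entries_.push_back(Entry{pointer, tag});
    return static_cast<uint32_t>(entries_.size() - 1);
  }

  // Returns nullptr for out-of-range handles and for type mismatches, so a
  // corrupted handle cannot be used to reinterpret one kind of object as another.
  void* Get(uint32_t handle, Tag expected) const {
    if (handle >= entries_.size()) return nullptr;
    const Entry& entry = entries_[handle];
    return entry.tag == expected ? entry.pointer : nullptr;
  }

 private:
  std::vector<Entry> entries_;
};
```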

I think it has paid off, though, because the new development is that V8 has managed to turn on hardware memory protection for the sandbox: sandboxed code is prevented by the hardware from writing memory outside the sandbox.

Leaning into the “attacker can write anything in their address space” threat model has led to some funny patches. For example, sometimes code needs to check flags about the page that an object is on, as part of a write barrier. So some GC-managed metadata needs to be in the sandbox. However, the garbage collector itself, which is outside the sandbox, can’t trust that the metadata is valid. We end up having two copies of state in some cases: in the sandbox, for use by sandboxed code, and outside, for use by the collector.

The best and most amusing instance of this phenomenon is related to integers. Google’s style guide recommends signed integers by default, so you end up with on-heap data structures with int32_t len and such. But if an attacker overwrites a length with a negative number, there are a couple funny things that can happen. The first is a sign-extending conversion to size_t by run-time code, which can lead to sandbox escapes. The other is mistakenly concluding that an object is small, because its length is less than a limit, because it is unexpectedly negative. Good times!
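Both failure modes are easy to reproduce in a few lines. The sketch below uses hypothetical names (`kSmallLimit`, `IsSmallSigned`, and friends) and is not taken from V8; it only shows what standard C++ integer conversions do to a negative `int32_t` length, and how reading the same 32 bits as unsigned avoids both surprises.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr int32_t kSmallLimit = 64;  // hypothetical "small object" threshold

// Signed versions: what you get with an on-heap `int32_t len`.
inline bool IsSmallSigned(int32_t len) { return len < kSmallLimit; }
inline size_t ToSizeSigned(int32_t len) { return static_cast<size_t>(len); }

// Unsigned versions: the same 32 bits, read as uint32_t.
inline bool IsSmallUnsigned(uint32_t len) {
  return len < static_cast<uint32_t>(kSmallLimit);
}
inline size_t ToSizeUnsigned(uint32_t len) { return static_cast<size_t>(len); }

inline void DemonstrateSignedLengthPitfalls() {
  const int32_t corrupted = -1;  // attacker-written length

  // Pitfall 1: the conversion sign-extends, giving the largest size_t.
  assert(ToSizeSigned(corrupted) == SIZE_MAX);

  // Pitfall 2: a negative length sails through the "is it small?" check.
  assert(IsSmallSigned(corrupted));

  // Read as unsigned, the same bits are a large but bounded 32-bit value,
  // and the small-object check rejects them.
  const uint32_t bits = static_cast<uint32_t>(corrupted);
  assert(ToSizeUnsigned(bits) == 0xFFFFFFFFu);
  assert(!IsSmallUnsigned(bits));
}
```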

oilpan

It took 10 years for Odysseus to get back from Troy, which is about as long as it has taken for conservative stack scanning to make it from Oilpan into V8 proper. Basically, Oilpan is garbage collection for C++ as used in Blink and Chromium. Sometimes it runs when the stack is empty; then it can be precise. But sometimes it runs when there might be references to GC-managed objects on the stack; in that case it runs conservatively.

Last time I described how V8 would like to add support for generational garbage collection to Oilpan, but that for that, you’d need a way to promote objects to the old generation that is compatible with the ambiguous references visited by conservative stack scanning. I thought V8 had a chance at success with their new mark-sweep nursery, but that seems to have turned out to be a lose relative to the copying nursery. They even tried sticky mark-bit generational collection, but it didn’t work out. Oh well; one good thing about Google is that they seem willing to try projects that have uncertain payoff, though I hope that the hackers involved came through their OKR reviews with their mental health intact.

Instead, V8 added support for pinning to the Scavenger copying nursery implementation. If a page has incoming ambiguous edges, it will be placed in a kind of quarantine area for a while. I am not sure what the difference is between a quarantined page, which logically belongs to the nursery, and a pinned page from the mark-compact old-space; they seem to require similar treatment. In any case, we seem to have settled into a design that was mostly the same as before, but in which any given page can opt out of evacuation-based collection.
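The general shape of page pinning under conservative scanning can be sketched as follows. This is a toy model and not the Scavenger’s code: `Page`, `MakePage`, `PinAmbiguousTargets`, `PagesToEvacuate`, and the 256 KiB page size are all assumptions made for illustration. A stack word that merely looks like an address into a nursery page cannot be rewritten if the object moves, so the whole page stays put and only the remaining pages are evacuated.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr uint64_t kPageSize = uint64_t{256} * 1024;  // assumed page size

struct Page {
  uint64_t start;
  bool pinned = false;
  // Unsigned wrap-around makes addresses below `start` fail the check.
  bool Contains(uint64_t addr) const { return addr - start < kPageSize; }
};

inline Page MakePage(uint64_t start) {
  assert(start % kPageSize == 0);
  Page page;
  page.start = start;
  return page;
}

// Conservative scan: every stack word is a potential (ambiguous) reference.
// Any page that such a word points into is pinned for this collection.
inline void PinAmbiguousTargets(std::vector<Page>& nursery,
                                const std::vector<uint64_t>& stack_words) {
  for (uint64_t word : stack_words) {
    for (Page& page : nursery) {
      if (page.Contains(word)) page.pinned = true;
    }
  }
}

// Only unpinned pages take part in copying; pinned pages opt out.
inline std::vector<uint64_t> PagesToEvacuate(const std::vector<Page>& nursery) {
  std::vector<uint64_t> starts;
  for (const Page& page : nursery) {
    if (!page.pinned) starts.push_back(page.start);
  }
  return starts;
}
```

A real collector would look up the page by masking the address rather than with a linear search; the loop here just keeps the sketch short.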

What do we get out of all of this? Well, not only can we get generational collection for Oilpan, but also we unlock cheaper, less bug-prone “direct handles” in V8 itself.

The funny thing is that I don’t think any of this is shipping yet; or, if it is, it’s only in a Finch trial to a minority of users or something. I am looking forward with interest to seeing a post from upstream V8 folks; whole doctoral theses have been written on this topic, and it would be a delight to see some actual numbers.

shared-memory multi-threading

JavaScript implementations have had the luxury of single-threadedness: with just one mutator, garbage collection is a lot simpler. But this is ending. I don’t know what the state of shared-memory multi-threading is in JS, but in WebAssembly it seems to be moving apace, and Wasm uses the JS GC. Maybe I am overstating the effort here (probably it doesn’t come to 20%), but wiring this up has been a whole thing.

I will mention just one patch here that I found to be funny. So with pointer compression, an object’s fields are mostly 32-bit words, with the exception of 64-bit doubles, so we can reduce the alignment on most objects to 4 bytes. V8 has had a bug open forever about alignment of double-holding objects that it mostly ignores via unaligned loads.

Thing is, if you have an object visible to multiple threads, and that object might have a 64-bit field, then the field should be 64-bit aligned to prevent tearing during atomic access, which usually means the object should be 64-bit aligned. That is now the case for Wasm structs and arrays in the shared space.
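The alignment consequence can be sketched with a toy bump allocator. Everything here is an assumption for illustration (`AlignUp`, `BumpAllocator`, and the `shared` flag are hypothetical names, not V8’s allocator): ordinary objects get 4-byte alignment, which pointer compression permits, while objects bound for the shared space get 8-byte alignment so that a 64-bit field at an 8-byte-multiple offset is naturally aligned for atomic access.

```cpp
#include <cassert>
#include <cstdint>

// Round `addr` up to the next multiple of `alignment` (a power of two).
inline uint64_t AlignUp(uint64_t addr, uint64_t alignment) {
  assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
  return (addr + alignment - 1) & ~(alignment - 1);
}

struct BumpAllocator {
  uint64_t top;  // next free address

  // Shared objects may hold 64-bit fields accessed atomically from several
  // threads, so they start on an 8-byte boundary; others only need 4.
  uint64_t Allocate(uint64_t size, bool shared) {
    const uint64_t alignment = shared ? 8 : 4;
    const uint64_t result = AlignUp(top, alignment);
    top = result + size;
    return result;
  }
};
```

For example, starting from `top = 0x1004`, two unshared 12-byte allocations land at `0x1004` and `0x1010`, leaving `top` at `0x101c`; a shared allocation is then bumped up to `0x1020`.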

side quests

Right, we’ve covered what to me are the main stories of V8’s GC over the past couple years. But let me mention a few funny side quests that I saw.

the heuristics two-step

This one I find to be hilariousad. Tragicomical. Anyway I am amused. So any real GC has a bunch of heuristics: when to promote an object or a page, when to kick off incremental marking, how to use background threads, when to grow the heap, how to choose whether to make a minor or major collection, when to aggressively reduce memory, how much virtual address space can you reasonably reserve, what to do on hard out-of-memory situations, how to account for off-heap mallocated memory, how to compute whether concurrent marking is going to finish in time or if you need to pause... and V8 needs to do this all in all its many configurations, with pointer compression off or on, on desktop, high-end Android, low-end Android, iOS where everything is weird, something called Starboard which is apparently part of Cobalt which is apparently a whole new platform that YouTube uses to show videos on set-top boxes, on machines with different memory models and operating systems with different interfaces, and on and on and on. Simply tuning the system appears to involve a dose of science, a dose of flailing around and trying things, and a whole cauldron of witchcraft. There appears to be one person whose full-time job it is to implement and monitor metrics on V8 memory performance and implement appropriate tweaks. Good grief!

mutex mayhem

Toon Verwaest noticed that V8 was exhibiting many more context switches on macOS than Safari, and identified V8’s use of platform mutexes as the problem. So he rewrote them to use os_unfair_lock on macOS. Then implemented adaptive locking on all platforms. Then... removed it all and switched to abseil.
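For readers unfamiliar with the term, “adaptive locking” generally means trying to take the lock cheaply a few times before falling back to a blocking wait that may put the thread to sleep. The sketch below illustrates only that general idea with the standard library; `AdaptiveMutex` and `kSpinLimit` are hypothetical, and this is neither the code V8 briefly carried nor how abseil’s mutex works internally.

```cpp
#include <mutex>
#include <thread>

// Toy adaptive lock: attempt a bounded number of non-blocking acquisitions,
// then give up and block. Short critical sections are often released during
// the spin phase, which avoids a sleep and the context switch that follows.
class AdaptiveMutex {
 public:
  void lock() {
    for (int i = 0; i < kSpinLimit; ++i) {
      if (mutex_.try_lock()) return;  // got it without blocking
      std::this_thread::yield();      // let the current holder make progress
    }
    mutex_.lock();  // contended for too long: block in the OS
  }

  void unlock() { mutex_.unlock(); }

  bool try_lock() { return mutex_.try_lock(); }

 private:
  static constexpr int kSpinLimit = 100;  // arbitrary; would need tuning
  std::mutex mutex_;
};
```

Because it provides `lock`, `unlock`, and `try_lock`, the class works with `std::lock_guard<AdaptiveMutex>` like any other standard mutex type.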

Personally, I am delighted to see this patch series; I wouldn’t have thought that there was juice to squeeze in V8’s use of locking. It gives me hope that I will find a place to do the same in one of my projects :)

ta-ta, third-party heap

It used to be that MMTk was trying to get a number of production language virtual machines to support abstract APIs so that MMTk could slot in a garbage collector implementation. Though this seems to work with OpenJDK, with V8 I think the churn rate and laser-like focus on the browser use-case makes an interstitial API abstraction a lose. V8 removed it a little more than a year ago.

fin

So what’s next? I don’t know; it’s been a while since I have been to Munich to drink from the source. That said, shared-memory multithreading and Wasm effect handlers will extend the memory management hacker’s full employment act indefinitely, not to mention actually landing and shipping conservative stack scanning. There is a lot to be done in non-browser V8 environments, whether in Node or on the edge, but it is admittedly harder to read the future than the past.

In any case, it was fun taking this look back, and perhaps I will have the opportunity to do this again in a few years. Until then, happy hacking!

OpenAlt’s AV background with running streams. Author: Michal Stanke.

OpenAlt’s AV background with running streams. Author: Michal Stanke.

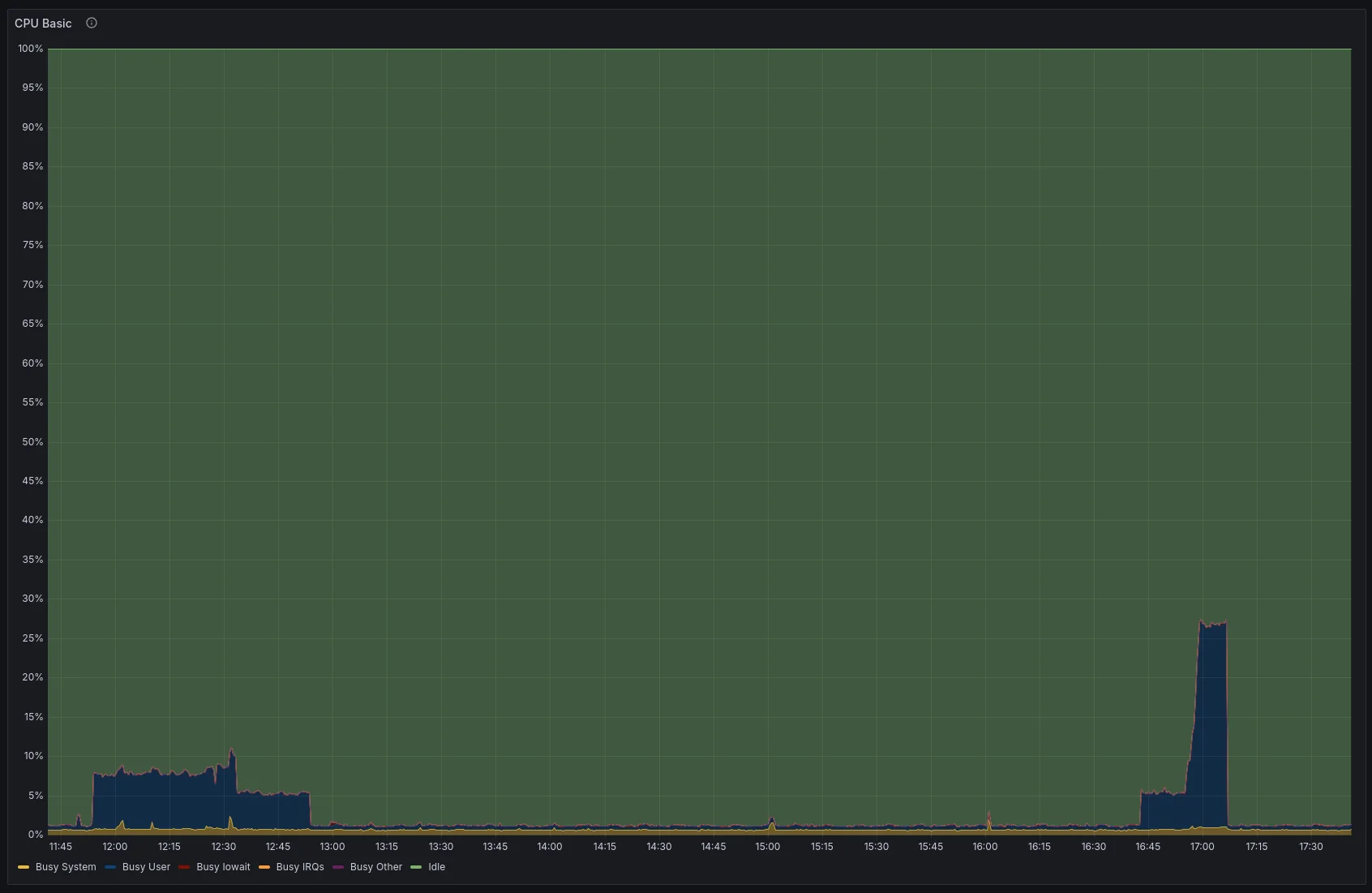

Load on the runner server during the stress test. Author: Adam Štrauch.

Load on the runner server during the stress test. Author: Adam Štrauch.

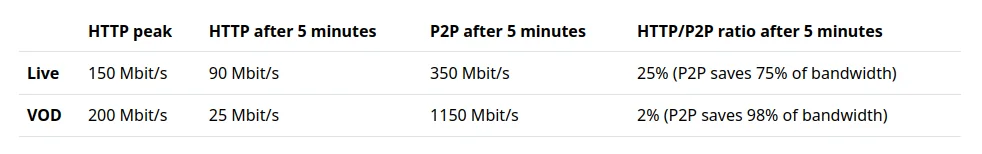

In practice, the server data download savings were large even with just 5 peers on a single stream and resolution.

In practice, the server data download savings were large even with just 5 peers on a single stream and resolution.

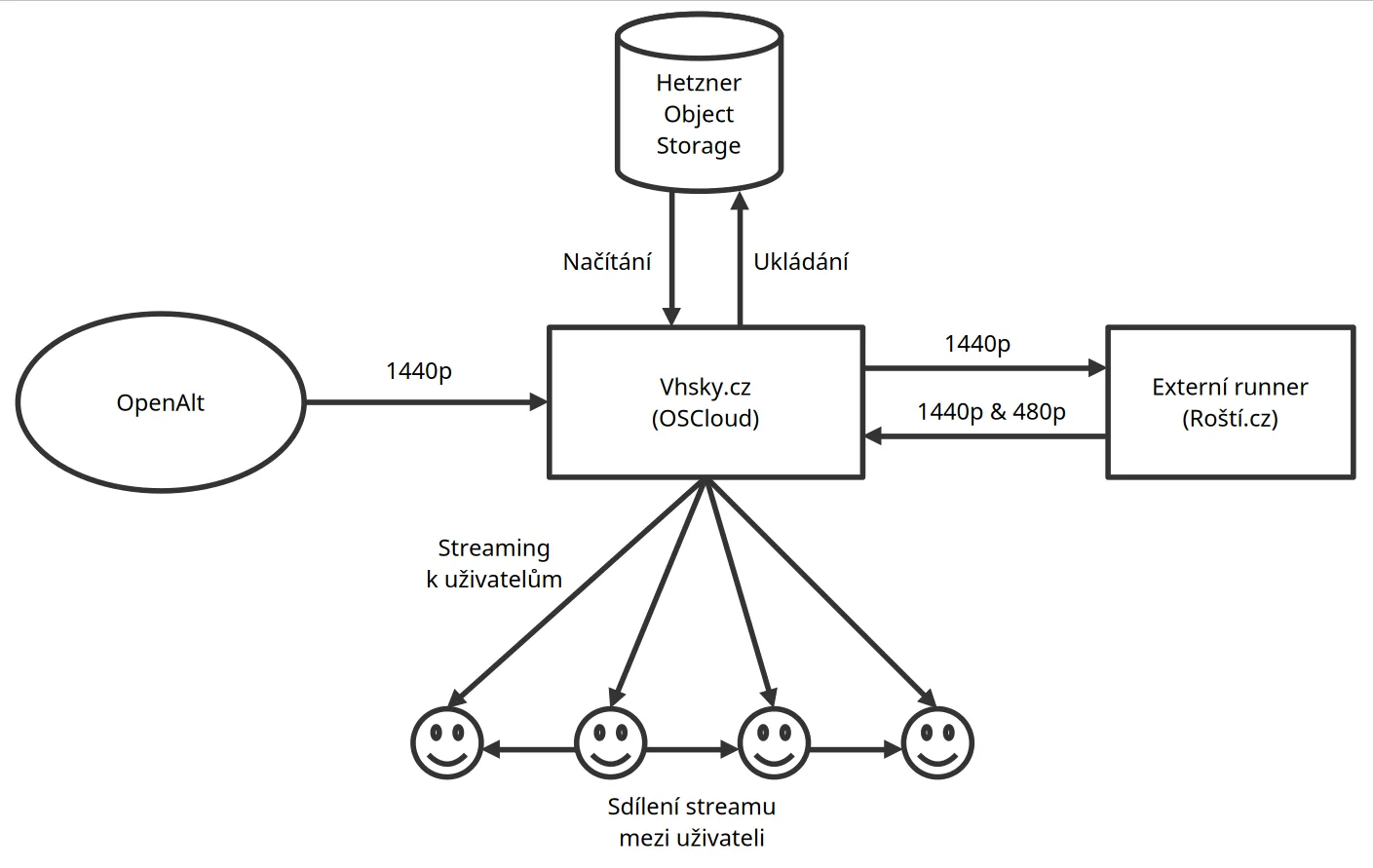

Diagram of the streaming solution for OpenAlt. Labels in Czech, but quite self-explanatory.

Diagram of the streaming solution for OpenAlt. Labels in Czech, but quite self-explanatory.

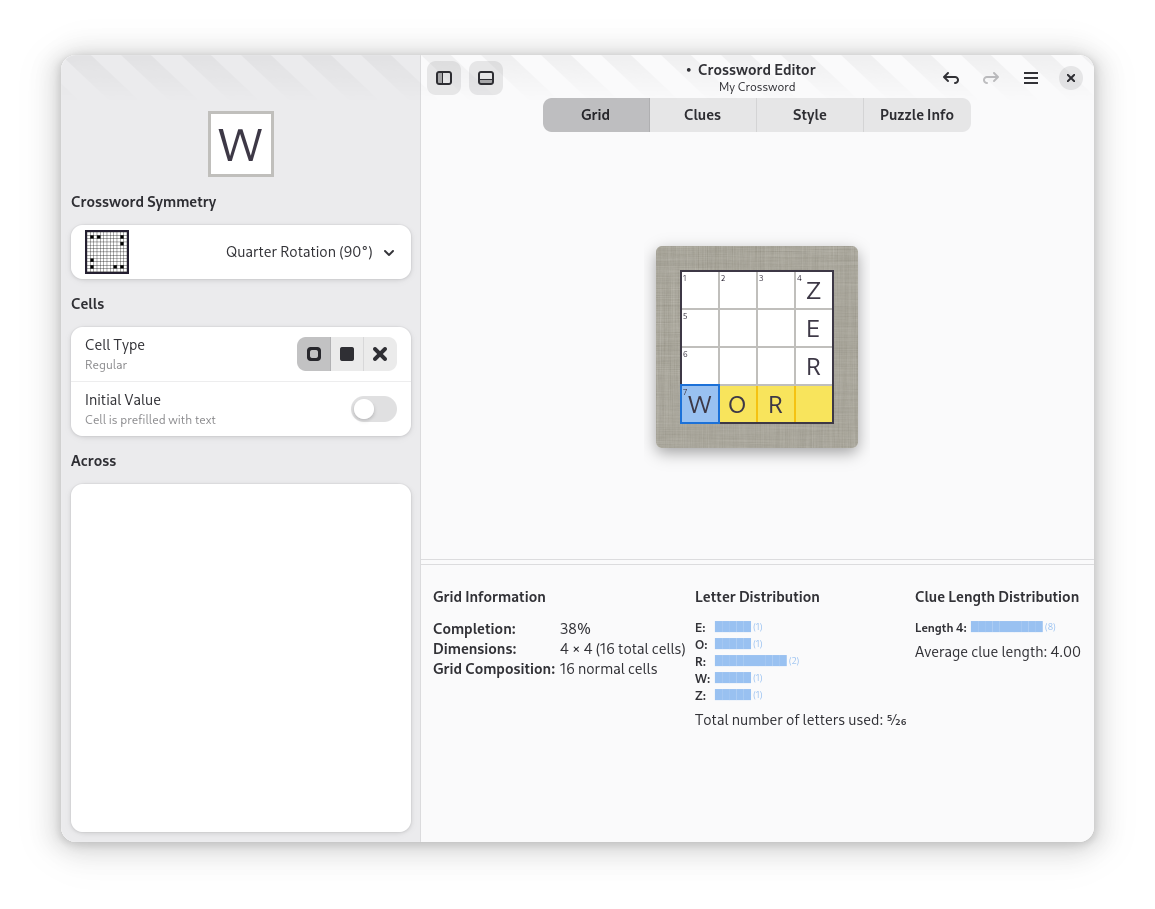

Child account view with added screen time overview and action for more options

Child account view with added screen time overview and action for more options

Screen Time page with detailed screen time records and time limit controls

Screen Time page with detailed screen time records and time limit controls

Wellbeing panel with a banner informing that limits can only be changed in Parental Controls

Wellbeing panel with a banner informing that limits can only be changed in Parental Controls

A GitHub contribution graph, showing a lot of activity in the past three weeks after virtually none the rest of the year.

A GitHub contribution graph, showing a lot of activity in the past three weeks after virtually none the rest of the year.

.

.