Sophie Herold: GNOME in 2025: Some Numbers

news.movim.eu / PlanetGnome • 27 December 2025 • 3 minutes

As some of you know, I like aggregating data. So here are some random numbers about GNOME in 2025. This post is not about making any point with the numbers I’m sharing. It’s just for fun.

So, what is GNOME? In total, 6 692 516 lines of code. Of that, 1 611 526 are from apps. The remaining 5 080 990 are in libraries and other components, like the GNOME Shell. These numbers cover “the GNOME ecosystem,” that is, the combination of all Core, Development Tools, and Circle projects. This currently includes exactly 100 apps. We summarize everything that’s not an app under the name “components.”
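As a quick sanity check, the split quoted above can be reproduced with a few lines of Python. This is only a sketch using the figures from this post; the variable names are made up for illustration, and the per-app average is a derived number, not one taken from the underlying data.

```python
# Figures quoted in the post (lines of code in the GNOME ecosystem).
TOTAL_LINES = 6_692_516
APP_LINES = 1_611_526
COMPONENT_LINES = 5_080_990
APP_COUNT = 100  # "exactly 100 apps"


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage."""
    return 100 * part / whole


# Apps and components together should account for every line.
assert APP_LINES + COMPONENT_LINES == TOTAL_LINES

app_share = share(APP_LINES, TOTAL_LINES)
component_share = share(COMPONENT_LINES, TOTAL_LINES)
mean_lines_per_app = APP_LINES / APP_COUNT  # derived, not from the post

print(f"apps:       {app_share:.1f} % of all lines")
print(f"components: {component_share:.1f} % of all lines")
print(f"mean size of an app: {mean_lines_per_app:,.0f} lines")
```

So roughly a quarter of the code lives in apps, and the rest in components.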

GNOME 48 was at least 90 % translated for 33 languages. In GNOME 49 this increased to 36 languages. That’s a record in the data that I have, going back to GNOME 3.36 in 2020. The languages besides American English are: Basque, Brazilian Portuguese, British English, Bulgarian, Catalan, Chinese (China), Czech, Danish, Dutch, Esperanto, French, Galician, Georgian, German, Greek, Hebrew, Hindi, Hungarian, Indonesian, Italian, Lithuanian, Persian, Polish, Portuguese, Romanian, Russian, Serbian, Serbian (Latin), Slovak, Slovenian, Spanish, Swedish, Turkish, Uighur, and Ukrainian. There are 19 additional languages that are translated 50 % or more. So maybe you can help with translating GNOME to Belarusian, Catalan (Valencian), Chinese (Taiwan), Croatian, Finnish, Friulian, Icelandic, Japanese, Kazakh, Korean, Latvian, Malay, Nepali, Norwegian Bokmål, Occitan, Punjabi, Thai, Uzbek (Latin), or Vietnamese in 2026?

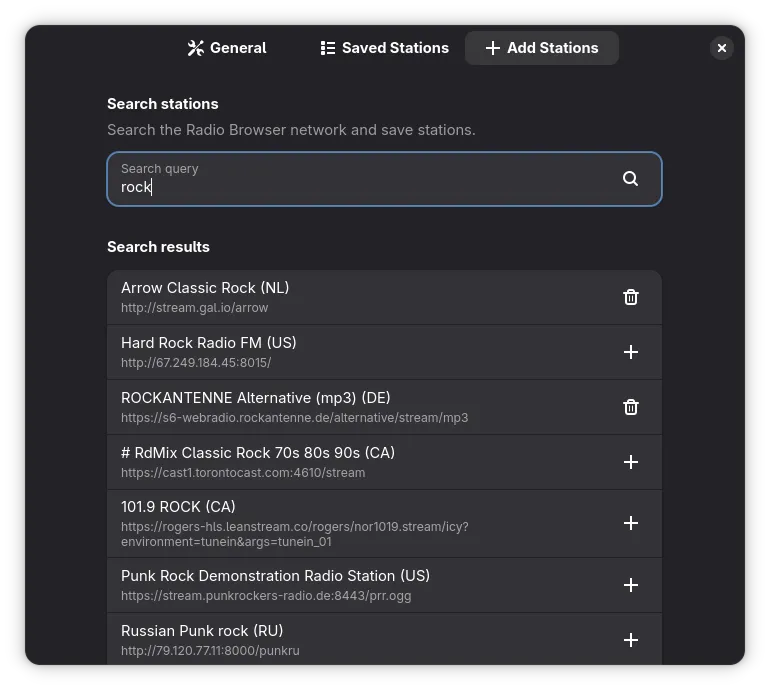

Speaking of languages: what programming languages are used in GNOME? Let’s look at GNOME Core apps first. Almost half of all apps are written in C. Note that for these data, we are counting TypeScript under JavaScript.

Share of GNOME Core apps by programming language.

The language distribution for GNOME Circle apps looks quite different, with Rust (41.7 %) and Python (29.2 %) being the most popular languages.

Share of GNOME Circle apps by programming language.

Overall, we can see that with C, JavaScript/TypeScript, Python, Rust, and Vala, there are five programming languages that are commonly used for app development within the GNOME ecosystem.

But what about components within GNOME? The default language for libraries is still C. More than three-quarters of the lines of code for components are written in it. The components with the largest codebase are GTK (820 000), GLib (560 000), and Mutter (390 000).

Lines of code for components within the GNOME ecosystem.
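To put the three largest components into perspective, here is a rough calculation of their combined share. It is a sketch that assumes the rounded figures above are counted the same way as the 5 080 990 lines given for all components earlier in this post; the names are illustrative only.

```python
# Total lines of code in components, as quoted earlier in the post.
COMPONENT_LINES = 5_080_990

# Rounded sizes of the three largest components, as quoted above.
largest = {"GTK": 820_000, "GLib": 560_000, "Mutter": 390_000}

combined = sum(largest.values())
combined_share = 100 * combined / COMPONENT_LINES

for name, lines in largest.items():
    print(f"{name:<7}{100 * lines / COMPONENT_LINES:5.1f} % of component lines")
print(f"combined: {combined:,} lines, {combined_share:.1f} %")
```

Under that assumption, these three projects alone make up about a third of all component code.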

But what about the remaining quarter? Lines of code are, of course, a questionable metric. For Rust, close to 400 000 lines of code are actually bindings for libraries. The majority of this code is automatically generated. Similarly, 100 000 lines of Vala code are in the Vala repository itself. But there are important components within GNOME that are not written in C: Orca, our screen reader, boasts 110 000 lines of Python code. Half of GNOME Shell is written in JavaScript, adding 65 000 lines of JavaScript code. Librsvg and glycin are libraries written in Rust that also provide bindings to other languages.

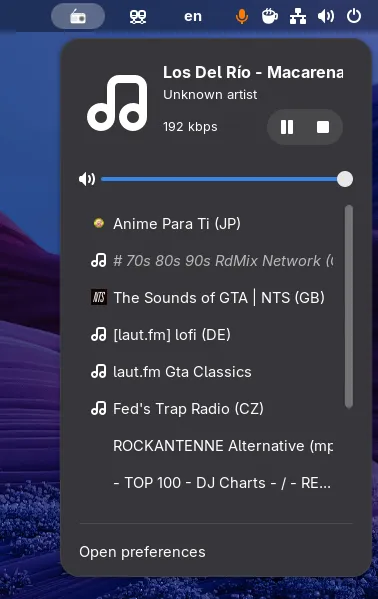

We are slowly approaching the end of the show. Let’s take a look at the GNOME Circle apps most popular on Flathub. I don’t trust the installation statistics on Flathub, since I have seen indications that for some apps, the number of installations is surprisingly high and cyclic. My guess is that some Linux distribution is installing these apps regularly as part of its test pipeline. Therefore, we instead check how many people have installed the latest update for the app. It’s not a perfect number either, but it looks much more reliable. The top five apps are: Blanket, Eyedropper, Newsflash, Fragments, and Shortwave. Sometimes, it takes fewer than 2 000 lines of code to create popular software.

And there are 862 people supporting the GNOME Foundation with a recurring donation. Will you join them for 2026 on donate.gnome.org?
