
The XMPP Standards Foundation: The XMPP Newsletter April 2022

news.movim.eu / PlanetJabber • 5 May, 2022 • 8 minutes

Welcome to the XMPP Newsletter, great to have you here again! This issue covers the month of April 2022.

Like this newsletter, many projects and their efforts in the XMPP community are a result of people’s voluntary work. If you are happy with the services and software you may be using, especially throughout the current situation, please consider saying thanks or helping these projects! Interested in supporting the Newsletter team? Read more at the bottom.

Newsletter translations

This is a community effort, and we would like to thank translators for their contributions. Volunteers are welcome! Translations of the XMPP Newsletter will be released here (with some delay):

- French: jabberfr.org and linuxfr.org

- German: xmpp.org and anoxinon.de

- Italian: nicfab.it

- Spanish: xmpp.org

XSF Announcements

- The XSF has been accepted as a hosting organization at Google Summer of Code 2022 (GSoC).

- XMPP Newsletter via mail: We migrated the mailing list to our own server, moving away from Tinyletter due to privacy concerns. It is a read-only list: once you subscribe, you will receive the XMPP Newsletter on a monthly basis.

- By the way, have you checked our nice XMPP RFC page? :-)

XSF fiscal hosting projects

The XSF offers fiscal hosting for XMPP projects. Please apply via Open Collective. For more information, see the announcement blog post. Current projects:

XMPP Community Projects

A new community space for XMPP related projects and individuals has been created in the Fediverse! Join us on our new Lemmy instance and chat about all XMPP things!

Are you looking for an XMPP provider that suits you? There is a new website based on the data of XMPP Providers. XMPP Providers has a curated list of providers and tools for filtering and creating badges for them. The machine-readable list of providers can be integrated in XMPP clients to simplify the registration. You can help by improving your website (as a provider), by automating the manual tasks (as a developer), and by adding new providers to the list (as an interested contributor). Read the first blog post!

Events

- XMPP Office Hours: available on our YouTube channel

- Berlin XMPP Meetup (remote): monthly meeting of XMPP enthusiasts in Berlin, every 2nd Wednesday of the month

Talks

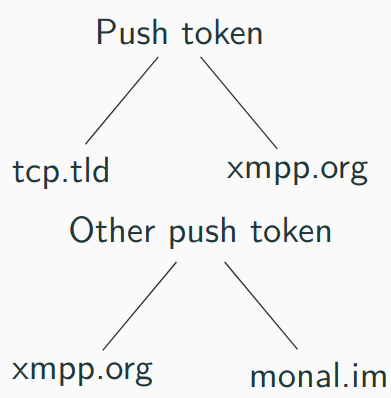

Thilo Molitor presented their new, even more privacy-friendly push design in Monal at the Berlin XMPP Meetup!

Articles

The Mellium Dev Communiqué for April 2022 has been released and can be found over on Open Collective.

Maxime “pep.” Buquet wrote some thoughts regarding “Deal on Digital Markets Act: EU rules to ensure fair competition and more choice for users” in his Interoperability in a “Big Tech” world article. In a later article he describes part of his threat model, detailing how XMPP comes into play and proposing ways it could be improved.

German “Freie Messenger” shares some thoughts on interoperability and the Digital Markets Act (DMA). They also offer a comparison of “XMPP/Matrix”.

Software news

Clients and applications

BeagleIM 5.2 and SiskinIM 7.2 just got released with fixes for OMEMO-encrypted messages in MUC channels, MUC participants disappearing randomly, and issues with VoIP calls sending an incorrect payload during call negotiation.

converse.js published version 9.1.0. It comes with a new dark theme, several improvements for encryption (OMEMO), an improved stanza timeout, font icons, updated translations, and enhancements of the IndexedDB. Find more in the release notes.

Gajim Development News: This month came with a lot of preparations for the release of Gajim 1.4 🚀 Gajim’s release pipeline has been improved in many ways, allowing us to make releases more frequently. Furthermore, April brought improvements for file previews on Windows.

Go-sendxmpp version v0.4.0 with experimental Ox (OpenPGP for XMPP) support has been released.

JMP offers international call rates based on a computing trie. There are also new commands and team members.

Monal 5.1 has been released. This release brings OMEMO support in private group chats, communication notifications on iOS 15, and many improvements.

The PravApp project is a plan to get a lot of people from India to invest small amounts to run an interoperable XMPP-based messaging service that makes it easier to join and discover contacts, similar to the Quicksy app. Prav will be Free Software, which respects users' freedom. The service will be backed by a cooperative society in India to ensure democratic decision making in which users can take part as well. Users will control the privacy policy of the service.

Psi+ 1.5.1619 (2022-04-09) has been released.

Poezio 0.14 has been released alongside multiple backend libraries. This new release brings in lots of bug fixes and small improvements. Big changes are coming, read more in the article.

Profanity 0.12.1 has been released, which brings some bug fixes.

UWPX ships two small pre-release updates concerning a critical fix for a crash that occurs when trying to render an invalid user avatar and issues with the Windows Store builds. Besides that, it also got a minor UI update this month.

Servers

Ignite Realtime Community:

- Version 9.1.0 release 1 of the Openfire inVerse plugin has been released, which enables deployment of the third-party Converse client in Openfire.

- Version 4.4.0 release 1 of the Openfire JSXC plugin has been released, which enables deployment of the third-party JSXC client in Openfire.

- Version 1.2.3 of the Openfire Message of the Day plugin has been released, and it ships with German translations for the admin console.

- Version 1.8.0 of the Openfire REST API plugin has been released, which adds new endpoints for readiness, liveliness and cluster status.

Libraries

slixmpp 1.8.2 has been released. It fixes RFC 3920 sessions, improves certificate error handling, and adds a plugin for XEP-0454 (OMEMO media sharing).

The mellium.im/xmpp library v0.21.2 has been released! Highlights include support for PEP Native Bookmarks and entity capabilities. For more information, see the release announcement.

Extensions and specifications

Developers and other standards experts from around the world collaborate on these extensions, developing new specifications for emerging practices, and refining existing ways of doing things. Proposed by anybody, the particularly successful ones end up as Final or Active - depending on their type - while others are carefully archived as Deferred. This life cycle is described in XEP-0001, which contains the formal and canonical definitions for the types, states, and processes. Read more about the standards process. Communication around Standards and Extensions happens in the Standards Mailing List (online archive).

By the way, xmpp.org features a new page about XMPP RFCs.

Proposed

The XEP development process starts by writing up an idea and submitting it to the XMPP Editor. Within two weeks, the Council decides whether to accept this proposal as an Experimental XEP.

- Pubsub Public Subscriptions

  - This specification provides a way to make subscriptions to a node public.

- Ephemeral Messages

  - This specification encourages a shift in privacy settings with regard to logging policies.

New

- No new XEPs this month.

Deferred

If an experimental XEP is not updated for more than twelve months, it will be moved off Experimental to Deferred. If there is another update, it will put the XEP back onto Experimental.

- No XEPs deferred this month.

Updated

- Version 0.4 of XEP-0356 (Privileged Entity)

  - Add “iq” privilege (necessary to implement XEPs such as Pubsub Account Management (XEP-0376)).

  - Roster pushes are now transmitted to privileged entities with a “roster” permission of “get” or “both”. This can be disabled.

  - Reformulate to specify that only the initial stanza and “unavailable” stanzas are transmitted with the “presence” permission.

  - Namespace bump. (jp)

Last Call

Last calls are issued once everyone seems satisfied with the current XEP status. After the Council decides whether the XEP seems ready, the XMPP Editor issues a Last Call for comments. The feedback gathered during the Last Call helps improve the XEP before it returns to the Council for advancement to Stable.

- No Last Call this month.

Stable

- No XEPs advanced to Stable this month.

Deprecated

- No XEP deprecated this month.

Call for Experience

A Call for Experience, like a Last Call, is an explicit call for comments, but in this case it’s mostly directed at people who’ve implemented, and ideally deployed, the specification. The Council then votes to move it to Final.

- No Call for Experience this month.

Spread the news!

Please share the news on other networks:

Subscribe to the monthly XMPP newsletter

Also check out our RSS Feed!

Looking for job offers or want to hire a professional consultant for your XMPP project? Visit our XMPP job board.

Help us to build the newsletter

This XMPP Newsletter is produced collaboratively by the XMPP community. Therefore, we would like to thank Adrien Bourmault (neox), anubis, Anoxinon e.V., Benoît Sibaud, cpm, daimonduff, emus, Ludovic Bocquet, Licaon_Kter, mathieui, MattJ, nicfab, Pierre Jarillon, Ppjet6, Sam Whited, singpolyma, TheCoffeMaker, wurstsalat, Zash for their support and help in creation, review, translation and deployment. Many thanks to all contributors and their continuous support!

Each month’s newsletter issue is drafted in this simple pad. At the end of each month, the pad’s content is merged into the XSF GitHub repository. We are always happy to welcome contributors. Do not hesitate to join the discussion in our Comm-Team group chat (MUC) and thereby help us sustain this as a community effort. You have a project and want to spread the news? Please consider sharing your news or events here, and promote them to a large audience.

Tasks we do on a regular basis:

- gathering news in the XMPP universe

- short summaries of news and events

- summary of the monthly communication on extensions (XEPs)

- review of the newsletter draft

- preparation of media images

- translations

License

This newsletter is published under the CC BY-SA license.